What is Event-Driven Architecture? Everything You Need To Know

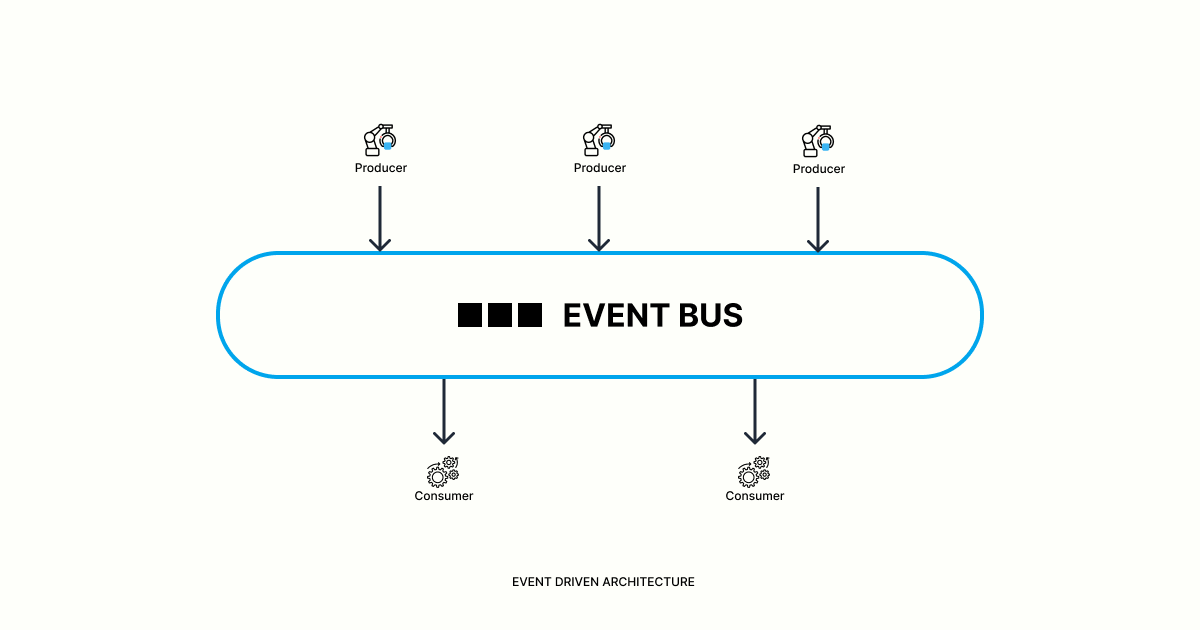

Event-Driven Architecture (EDA) is a software architecture, commonly built on a publish/subscribe (pub/sub) model, in which producers publish events, a broker stores and routes them, and consumers read them as an event stream.

It has three main components: the producer, the event bus (or broker), and the consumer, as shown in the figure.

Figure: Event-Driven Architecture

Introduction

In this guide, you will learn what event-driven architecture is, how it works, its benefits, some examples, and the tools commonly used to build it. It will also help you understand the producer-consumer mechanism and how events flow through a system.

What does an event mean?

An event is a significant change in state that other parts of a system may need to react to. It can be anything from an update to a record to an action taken by a user. For example, an item being placed in a shopping cart on an e-commerce website is an event.
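In code, an event is often represented as an immutable record describing what happened. The sketch below is a minimal illustration, assuming a hypothetical `Event` class whose field names (`type`, `payload`, `id`, `occurred_at`) were chosen for this example rather than taken from any standard schema.

```python
import uuid
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)  # frozen=True: fields cannot be reassigned after creation
class Event:
    """A record of something that happened (hypothetical, illustrative schema)."""

    type: str      # what happened, e.g. "ItemAddedToCart"
    payload: dict  # the facts describing the state change
    id: str = field(default_factory=lambda: str(uuid.uuid4()))
    occurred_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


# The shopping-cart example expressed as an event.
event = Event(
    type="ItemAddedToCart",
    payload={"cart_id": "c-42", "sku": "SKU-123", "quantity": 1},
)
print(event.type, event.payload["sku"])  # ItemAddedToCart SKU-123
```

Making the record immutable reflects the idea that an event describes something that has already happened; consumers read it but do not change it.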

Event-Driven Architecture and its working mechanism

Event-driven architecture (EDA) is a software design pattern for application development. It allows organizations to detect “events” (valuable business moments such as customer transactions) and act on them as they happen.

EDA differs from a traditional request-driven system, in which a service must wait for a reply before it can move on to the next task. An event-driven system instead detects events and reacts to them as they occur.

Event-driven architecture is an integration model built around the publication, capture, processing, and storage (or persistence) of events. Specifically, when an application or service performs an action or undergoes a change that another application or service might want to know about, it publishes an event—a record of that action or change—that another application or service can consume and process to perform one or more actions in turn.

Event-driven architecture enables loose coupling between connected applications and services: they communicate by publishing and consuming events without knowing anything about each other except the event format.

This model offers significant advantages over a request/response architecture (or integration model), in which one application or service must send a specific request to another known application or service that is waiting for that request.

Event-driven architecture is well suited to cloud-native applications and enables powerful application technologies, such as real-time analytics and decision support.

In an event-driven architecture, applications act as event producers or event consumers (and often as both).

An event producer transmits an event—in the form of a message—to a broker or some other form of event router, where the event’s chronological order is maintained relative to other events. An event consumer ingests the message—in real-time (as it occurs) or at any other time it wants—and processes the message to trigger another action, workflow, or event of its own.

In a simple example, a banking service might transmit a ‘deposit’ event, which another service at the bank would consume and respond to by writing a deposit to the customer’s statement. But event-driven integrations can also trigger real-time responses based on a complex analysis of huge volumes of data, such as when the ‘event’ of a customer clicking a product on an e-commerce site generates instant product recommendations based on other customers’ purchases.
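The producer, router, and consumer roles in the deposit example can be sketched with a minimal in-memory router. This is an illustration only: `EventRouter`, `on_deposit`, and the event fields are hypothetical, and dispatch here is synchronous and in-process, whereas a real broker delivers messages over the network and often asynchronously.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List

Handler = Callable[[dict], None]


class EventRouter:
    """Minimal in-memory event router standing in for a real broker."""

    def __init__(self) -> None:
        # event type -> handlers (consumers) registered for it
        self._handlers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._handlers[event_type].append(handler)

    def publish(self, event_type: str, payload: dict) -> None:
        # The producer knows only the event type and payload format,
        # not which consumers (if any) will react to it.
        for handler in self._handlers.get(event_type, []):
            handler(payload)


# Consumer: a statement service that records deposits.
statement: List[str] = []


def on_deposit(payload: dict) -> None:
    statement.append(f"DEPOSIT {payload['amount']:.2f} to {payload['account']}")


router = EventRouter()
router.subscribe("deposit", on_deposit)

# Producer: the banking service announces a deposit.
router.publish("deposit", {"account": "ACC-1", "amount": 250.0})
print(statement)  # ['DEPOSIT 250.00 to ACC-1']
```

Adding another consumer (for example, a hypothetical fraud-check handler subscribed to `"deposit"`) would require no change to the producer, which is the loose coupling EDA aims for.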

Event-driven architecture messaging models

There are two basic models for transmitting events in an event-driven architecture.

Event messaging or publish/subscribe

In the event messaging or publish/subscribe model, event consumers subscribe to a class or classes of messages published by event producers. When an event producer publishes an event, the message is delivered to every consumer subscribed to that class of messages.

Typically, a broker handles the transmission of event messages between publishers and subscribers. The broker receives each event message, translates it if necessary, maintains its order relative to other messages, makes them available to subscribers for consumption, and then deletes them once they are consumed (so that they cannot be consumed again).
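A toy broker can illustrate these pub/sub semantics: each subscriber receives its own copy of every message on a topic, in publish order, and a message is deleted from that subscriber's queue once it is consumed. `MessageBroker` and its methods are hypothetical names; message translation, durability, and acknowledgements, which real brokers handle, are omitted.

```python
from collections import deque
from typing import Deque, Dict, Optional


class MessageBroker:
    """Toy pub/sub broker: one FIFO queue per subscriber per topic;
    a message is removed from a subscriber's queue once consumed."""

    def __init__(self) -> None:
        # topic -> subscriber name -> pending messages
        self._queues: Dict[str, Dict[str, Deque[dict]]] = {}

    def subscribe(self, topic: str, subscriber: str) -> None:
        self._queues.setdefault(topic, {})[subscriber] = deque()

    def publish(self, topic: str, message: dict) -> None:
        # Copy the message into every current subscriber's queue, preserving order.
        for queue in self._queues.get(topic, {}).values():
            queue.append(message)

    def consume(self, topic: str, subscriber: str) -> Optional[dict]:
        queue = self._queues[topic][subscriber]
        # popleft() returns and deletes the oldest message,
        # so the same subscriber cannot consume it again.
        return queue.popleft() if queue else None


broker = MessageBroker()
broker.subscribe("orders", "billing")
broker.subscribe("orders", "shipping")
broker.publish("orders", {"order_id": 1})
broker.publish("orders", {"order_id": 2})

print(broker.consume("orders", "billing"))   # {'order_id': 1}
print(broker.consume("orders", "billing"))   # {'order_id': 2}
print(broker.consume("orders", "billing"))   # None: already consumed
print(broker.consume("orders", "shipping"))  # {'order_id': 1}
```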

Event Streaming

In the event streaming model, event producers publish streams of events to a broker. Event consumers subscribe to the streams, but instead of receiving and consuming every event as it is published, they can step into each stream at any point and consume only the events they want. The key difference here is that the events are retained by the broker even after the consumers have received them.
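A minimal event stream can be modeled as an append-only log in which each consumer tracks its own read position (offset). Reading advances only that consumer's offset, so events stay available to other consumers and can be replayed. This is loosely inspired by offset-based platforms such as Kafka but is not their API; `EventStream`, `seek`, and `poll` are hypothetical names.

```python
from typing import Dict, List


class EventStream:
    """Toy append-only log: events are retained after being read,
    and each consumer tracks its own position (offset)."""

    def __init__(self) -> None:
        self._log: List[dict] = []
        self._offsets: Dict[str, int] = {}

    def __len__(self) -> int:
        return len(self._log)

    def append(self, event: dict) -> int:
        self._log.append(event)
        return len(self._log) - 1  # offset of the new event

    def seek(self, consumer: str, offset: int) -> None:
        # A consumer may step into the stream at any point.
        self._offsets[consumer] = offset

    def poll(self, consumer: str, max_events: int = 10) -> List[dict]:
        start = self._offsets.get(consumer, 0)  # new consumers start at the beginning here
        batch = self._log[start:start + max_events]
        # Only this consumer's offset moves; nothing is deleted from the log.
        self._offsets[consumer] = start + len(batch)
        return batch


stream = EventStream()
for i in range(5):
    stream.append({"seq": i})

stream.seek("analytics", 0)  # replay everything from the beginning
stream.seek("alerts", 3)     # only interested in recent events

print([e["seq"] for e in stream.poll("analytics")])  # [0, 1, 2, 3, 4]
print([e["seq"] for e in stream.poll("alerts")])     # [3, 4]
print(len(stream))  # 5: events are retained after being read
```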

A data streaming platform, such as Apache Kafka, manages the logging and transmission of very large volumes of events at high throughput (the largest deployments handle trillions of event records per day in real time). A streaming platform offers certain characteristics a message broker does not:

- Event persistence: Because consumers may consume events at any time after they are published, event streaming records are persistent—they are maintained for a configurable amount of time, anywhere from fractions of a second to forever. This enables event stream applications to process historical data, as well as real-time data.

- Complex event processing: Like event messaging, event streaming can be used for simple event processing, in which each published event triggers transmission and processing by one or more specific consumers. But it can also be used for complex event processing, in which event consumers process entire series of events and perform actions based on the result.
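As an illustration of complex event processing, the sketch below raises an alert only when a pattern appears across several events: a user reaching three failed logins within 60 seconds. The scenario, the `detect_bruteforce` function, and both thresholds are hypothetical; production systems would typically run this kind of logic in a stream processor rather than a loop over a list.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, List, Tuple

WINDOW_SECONDS = 60  # hypothetical detection window
THRESHOLD = 3        # hypothetical number of failures that triggers an alert


def detect_bruteforce(events: List[Tuple[int, str, str]]) -> List[Tuple[int, str]]:
    """Scan time-ordered (timestamp, user, outcome) events and return
    (timestamp, user) whenever a user reaches THRESHOLD failed logins
    within WINDOW_SECONDS."""
    recent: Dict[str, Deque[int]] = defaultdict(deque)
    alerts: List[Tuple[int, str]] = []
    for ts, user, outcome in events:
        if outcome != "failure":
            continue
        window = recent[user]
        window.append(ts)
        # Drop this user's failures that have fallen out of the time window.
        while window and ts - window[0] > WINDOW_SECONDS:
            window.popleft()
        if len(window) >= THRESHOLD:
            alerts.append((ts, user))
            window.clear()  # start counting afresh after an alert
    return alerts


events = [
    (0, "alice", "failure"),
    (10, "bob", "success"),
    (20, "alice", "failure"),
    (45, "alice", "failure"),   # third failure within 60 s: alert
    (200, "alice", "failure"),  # earlier failures no longer count
]
print(detect_bruteforce(events))  # [(45, 'alice')]
```

No single event here triggers the action; the decision depends on the series, which is what distinguishes complex from simple event processing.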

Benefits of event-driven architecture

Compared to the request/response application architecture, event-driven architecture offers several advantages and opportunities for developers and organizations:

- Powerful real-time response and analytics: Event streaming enables applications that respond to changing business situations and make predictions and decisions based on all available current and historical data in real time. This has benefits in any number of areas—from processing streams of data generated by various IoT devices to predicting and squashing security threats on the fly, to automating supply chains for optimal efficiency.

- Fault tolerance, scalability, simplified maintenance, versatility, and other benefits of loose coupling: Applications and components in an event-driven architecture aren’t dependent on each other’s availability; they can be independently updated, tested, and deployed without interruption of service, and when one component goes down, a backup can be brought online. Event persistence enables the ‘replaying’ of past events, which can help recover data or functionality after an event consumer outage. Components can be scaled easily and independently of each other across the network, and developers can revise or enrich applications and systems by adding and removing event producers and consumers.

- Asynchronous messaging: Event-driven architecture enables components to communicate asynchronously. Producers publish event messages on their own schedule, without waiting for consumers to receive them (or even knowing whether consumers received them). In addition to simplifying integration, this improves the application experience for users. A user completing a task in one component can move on to the next task without waiting, regardless of any downstream integrations between that component and others in the system.
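Asynchronous messaging can be sketched in-process with Python's `asyncio.Queue` standing in for a broker (an assumption made to keep the example self-contained). The producer publishes all of its events and finishes before the consumer has processed any of them; `producer`, `consumer`, and `main` are hypothetical names.

```python
import asyncio


async def producer(queue: asyncio.Queue, log: list) -> None:
    for order_id in range(3):
        # put_nowait() returns immediately: the producer does not
        # wait for any consumer to receive or process the event.
        queue.put_nowait({"order_id": order_id})
        log.append(f"published {order_id}")
    log.append("producer done")


async def consumer(queue: asyncio.Queue, log: list) -> None:
    while True:
        event = await queue.get()
        await asyncio.sleep(0.01)  # simulate slow downstream work
        log.append(f"processed {event['order_id']}")
        queue.task_done()


async def main() -> list:
    queue: asyncio.Queue = asyncio.Queue()
    log: list = []
    worker = asyncio.create_task(consumer(queue, log))
    await producer(queue, log)  # returns before any event is processed
    await queue.join()          # waits only so the demo can show the final log
    worker.cancel()
    return log


log = asyncio.run(main())
print(log)  # all "published" entries appear before any "processed" entry
```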

What is Request Driven vs Event Driven?

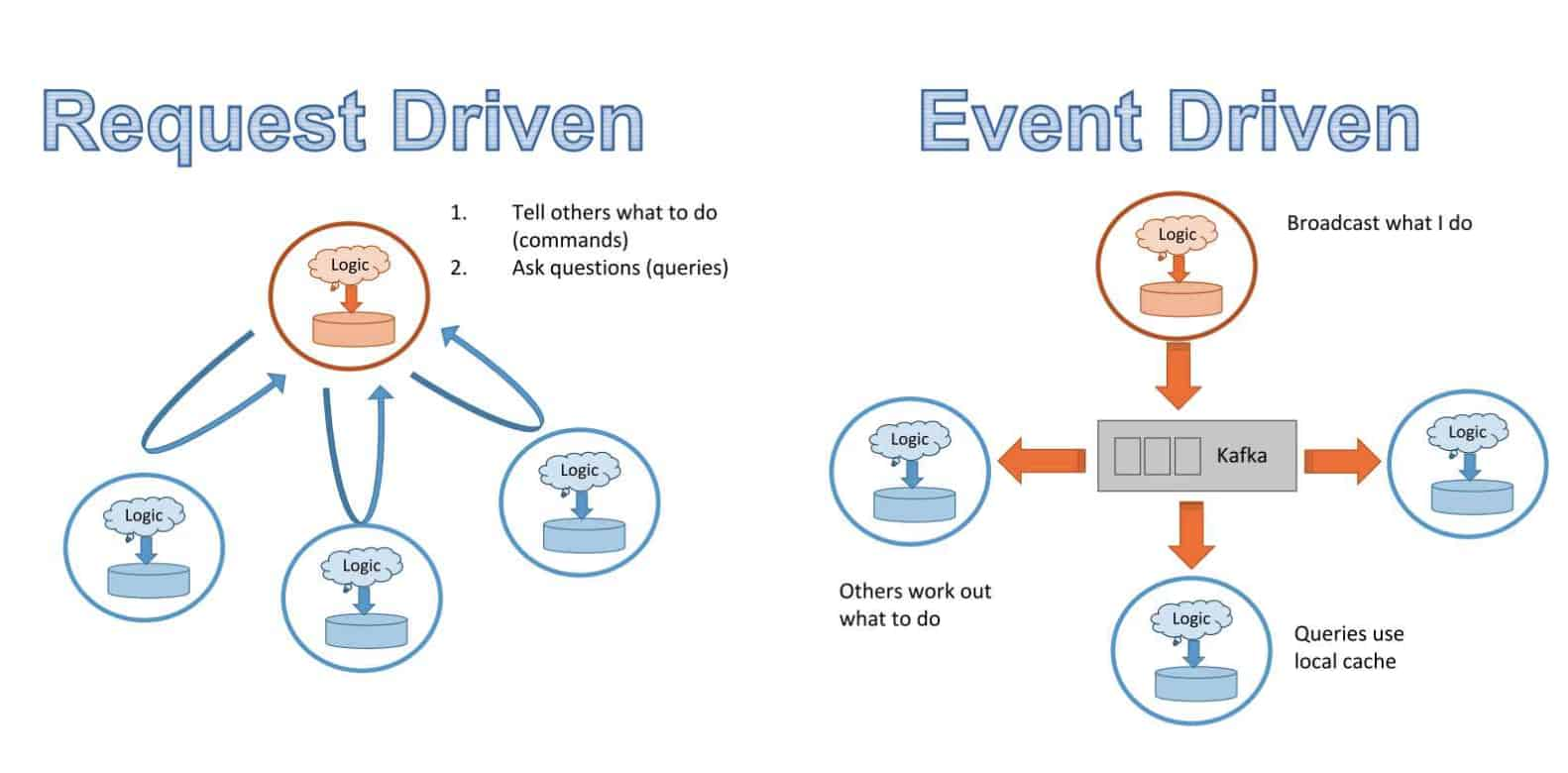

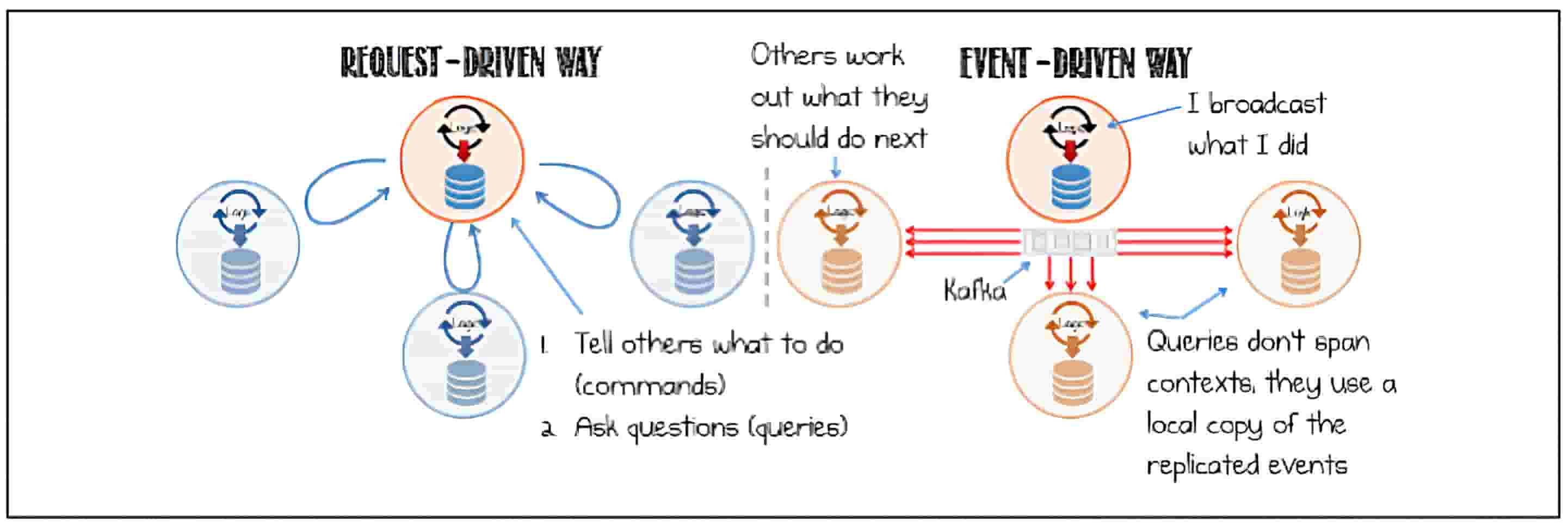

Request-driven vs Event-driven

Over the last decade, the software world has moved toward microservices. The idea is to break your business logic into small components, each a self-contained service that can be maintained independently. The owner of each component can update and test it quickly without having to consult the rest of the system.

Figure: Request Driven vs Event Driven

Microservices often go hand-in-hand with REST, an architectural style for APIs that these microservices commonly use to communicate. REST APIs are request-driven: a client (service) sends a request telling its server exactly what to do, using HTTP methods such as POST and GET, and the server responds with the results. The server must be listening for a request in order to receive it.

Figure: Comparison between the request-response and event-driven approaches demonstrating how event-driven approaches provide less coupling.

Because data in a request-driven world is handled through requests between individual services, no one has an overview of how data flows through the entire system. Consider a simple system with 3 services:

- A manages driver availability

- B manages ride demand

- C predicts the best possible price to show customers each time they request a ride

Because prices depend on availability and demand, service C’s output depends on the outputs of services A and B. First, this system requires inter-service communication: C needs to ping A and B for predictions, and A needs to ping B to know whether to mobilize more drivers and ping C to know what price incentive to give them. Second, there would be no easy way to monitor how changes in A’s or B’s logic affect the performance of service C, or to map the data flow to debug a sudden drop in service C’s performance.

With only 3 services, things are already getting complicated. Imagine having hundreds, if not thousands, of services, as major Internet companies do. Inter-service communication would blow up. Sending data as JSON blobs over HTTP, the way REST requests are commonly made, is also relatively slow, so inter-service data transfer can become a bottleneck that slows down the entire system.

Instead of having 20 services ping service A for data, what if, whenever an event happens within service A, the event is broadcast to a stream, and any service that wants data from A subscribes to that stream and picks out what it needs? What if there is a stream that all services can broadcast their events to and subscribe to? This model is called pub/sub: publish and subscribe. This is what solutions like Kafka let you do. Since all data flows through a stream, you can set up a dashboard to monitor your data and its transformations across the system. Because it is based on events broadcast by services, this architecture is event-driven.
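The event-driven version of the ride-pricing example can be sketched as follows. Service C (`PricingService`) never calls A or B; it subscribes to hypothetical `driver_availability` and `ride_demand` topics and recomputes its price whenever an event arrives. The in-memory `publish` function stands in for a platform such as Kafka, and the surge formula is invented purely for illustration.

```python
from collections import defaultdict
from typing import Callable, Dict, List

# Tiny in-memory pub/sub bus standing in for a streaming platform.
subscribers: Dict[str, List[Callable[[dict], None]]] = defaultdict(list)


def publish(topic: str, event: dict) -> None:
    for handler in subscribers[topic]:
        handler(event)


class PricingService:
    """Service C: keeps a local view built from A's and B's events."""

    BASE_FARE = 10.0  # hypothetical base price

    def __init__(self) -> None:
        self.available_drivers = 0
        self.open_requests = 0
        self.price = self.BASE_FARE
        subscribers["driver_availability"].append(self.on_drivers)
        subscribers["ride_demand"].append(self.on_demand)

    def on_drivers(self, event: dict) -> None:
        self.available_drivers = event["available"]
        self._reprice()

    def on_demand(self, event: dict) -> None:
        self.open_requests = event["open_requests"]
        self._reprice()

    def _reprice(self) -> None:
        # Hypothetical surge rule: scale the fare by demand/supply, never below 1x.
        ratio = self.open_requests / max(self.available_drivers, 1)
        self.price = round(self.BASE_FARE * max(1.0, ratio), 2)


pricing = PricingService()
publish("driver_availability", {"available": 4})  # emitted by service A
publish("ride_demand", {"open_requests": 10})     # emitted by service B
print(pricing.price)  # 25.0
```

A monitoring dashboard could subscribe to the same topics without any change to services A, B, or C.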

Request-driven architecture works well for systems that rely more on logic than on data. Event-driven architecture works better for systems that are data-heavy.

Event-driven architecture and microservices

In microservices—a foundational cloud-native application architecture—applications are assembled from loosely coupled, independently deployable services. The main benefits of microservices are essentially the benefits of loose coupling—ease of maintenance, the flexibility of deployment, independent scalability, and fault tolerance.

Not surprisingly, event-driven architecture is widely regarded as a best practice for microservices implementations. Microservices can communicate with each other using REST APIs, but REST, a request/response integration model, undermines many of the benefits of a loosely coupled microservices architecture by forcing a synchronous, tightly coupled integration between the services.

Some of the Most Common Event-Driven Architecture Tools

- Apache Kafka: Apache Kafka is an open-source distributed event streaming platform used by thousands of companies for high-performance data pipelines, streaming analytics, data integration, and mission-critical applications. It is developed under the Apache Software Foundation and written in Java and Scala.

- Amazon Kinesis: Amazon Kinesis is a real-time data processing platform provided by Amazon Web Services. Amazon Kinesis makes it easy to collect, process, and analyze real-time, streaming data so you can get timely insights and react quickly to new information. Amazon Kinesis offers key capabilities to cost-effectively process streaming data at any scale, along with the flexibility to choose the tools that best suit the requirements of your application.

- RabbitMQ: RabbitMQ is an open-source message-broker software that originally implemented the Advanced Message Queuing Protocol and has since been extended with a plug-in architecture to support Streaming Text Oriented Messaging Protocol, MQ Telemetry Transport, and other protocols.

- Apache Pulsar: Apache Pulsar is a cloud-native, multi-tenant, high-performance solution for server-to-server messaging and queuing built on the publish-subscribe (pub/sub) pattern. Pulsar combines features of a traditional messaging system like RabbitMQ with those of a pub/sub system like Apache Kafka, and it can scale up or down dynamically without downtime. It's used by thousands of companies for high-performance data pipelines, microservices, instant messaging, data integrations, and more.
